Unlocking the Potential of Generative AI in Cybersecurity: A Roadmap to Opportunities and Challenges

Oct 5, 2023

This is the first in a series of blog posts about cybersecurity to mark Cybersecurity Awareness Month in October.

Generative artificial intelligence (AI) is a fascinating field that is taking the world by storm. While traditional AI models are designed to recognize and classify existing patterns, generative AI models are trained to produce new patterns and new output data, something that was hard to imagine just a few years ago. Midjourney and DALL-E create art, and ChatGPT writes compelling text that can be difficult to distinguish from text written by humans.

Imagine the potential of this technology in industries such as healthcare and cybersecurity, where it can be used to create new solutions to existing problems. We are only at the beginning of this journey. However, despite the opportunities and applications it offers in cybersecurity, generative AI comes with its own challenges.

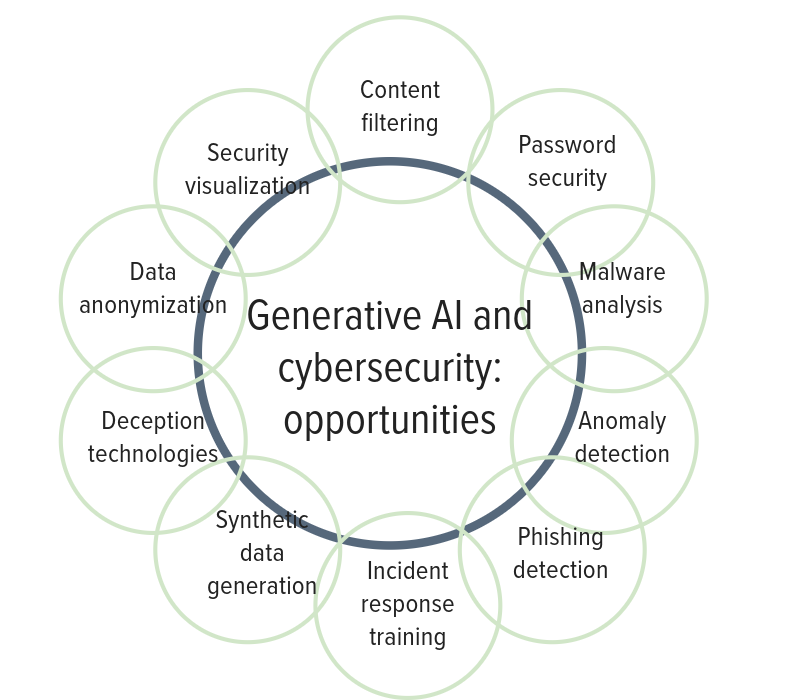

The Opportunities

Generative AI can be a game-changer in cybersecurity. By analyzing vast amounts of data, it can help detect potential threats and vulnerabilities and support automated responses to attacks. The technology can also help develop advanced authentication systems and simulate attack scenarios to test the effectiveness of security measures. For example, AI-powered tools built on deep-learning models for natural language processing can suggest fixes for potential security flaws while developers are still writing the code. This reduces the need for manual fixes later in the software development lifecycle, saving valuable time and resources.

Generative AI and related machine learning techniques have already been applied in several real-life cybersecurity scenarios, providing enhanced threat detection, automated response, advanced authentication, and improved security testing:

- Anomaly Detection: Generative models such as variational autoencoders (VAEs) have been used to detect anomalies in network traffic. By learning the normal patterns of traffic, VAEs can flag unusual behavior that might indicate a cyberattack. For instance, Darktrace’s Enterprise Immune System is an AI-powered cybersecurity solution that uses unsupervised machine learning to detect and respond to cyber threats. It can identify unusual behavior patterns within a network, such as an employee’s sudden access to sensitive data or a device connecting to the network for the first time. This allows it to detect threats that may have gone unnoticed by traditional security measures.

- Phishing Detection: Generative adversarial networks (GANs) have been used to generate and detect phishing websites. These models can create realistic phishing pages to test an organization’s defenses or identify phishing websites in real time. For instance, Google applied generative AI to improve email security. It used reinforcement learning to train its AI system to recognize phishing emails and protect Gmail users from potential threats.

- Malware Analysis: Generative AI can generate variations of known malware samples. This helps cybersecurity researchers and analysts stay ahead of evolving malware threats by creating new signatures and improving detection capabilities. For instance, Deep Instinct’s cybersecurity platform uses deep learning algorithms to identify and prevent cyber threats. The platform can analyze millions of files and data points in real time, identifying malware and other threats before they can cause damage.

- Password Security: Generative models can analyze patterns in passwords and generate recommendations for stronger, more secure passwords. They can also be used to identify weak passwords within an organization’s user base.

- Content Filtering: Generative AI, particularly in natural language processing, is used for content filtering to identify and block inappropriate or harmful content in emails, chat messages, or social media. For instance, IBM Watson for Cybersecurity is an AI-driven platform that uses natural language processing and machine learning to analyze security data. It can detect patterns that may not be visible to human analysts and identify potential threats and vulnerabilities. The platform also provides automated response recommendations, reducing the time needed to respond to an attack and minimizing the damage caused. In another example, various companies have employed OpenAI’s GPT-3 for content filtering and moderation in chat applications, social media, and email to automatically detect and block harmful or inappropriate content.

- Incident Response Training: Simulating cyberattack scenarios using generative AI helps organizations train their incident response teams. These simulations provide a safe environment for practicing responses to different cyber threats.

- Synthetic Data Generation: Generative models can create synthetic datasets for training machine learning models in a controlled and privacy-preserving manner. Research institutions and organizations use such datasets in cybersecurity research to develop, test, and evaluate security solutions without exposing real, potentially sensitive data.

- Deception Technologies: Generative AI can create decoy assets or honeypots within a network to attract attackers. By monitoring interactions with these decoys, security teams can detect and respond to threats early.

- Data Anonymization: Generative models can be used to anonymize sensitive data before it is shared or analyzed, ensuring that privacy regulations are followed while still allowing for meaningful analysis.

- Security Visualization: Generative AI can be employed to create visual representations of complex cybersecurity data, making it easier for security analysts to identify patterns and anomalies in large datasets.
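
To make the anomaly-detection idea above more concrete, here is a minimal sketch of the "learn what normal looks like, then flag what does not fit" pattern. It is not a VAE: as a much simpler stand-in, it models normal traffic with a mean and standard deviation and flags readings that lie far from that baseline. The function names, the traffic figures, and the threshold of three standard deviations are all assumptions made for illustration.

```python
import statistics


def fit_baseline(normal_values):
    """Learn the mean and standard deviation of known-normal readings."""
    return statistics.fmean(normal_values), statistics.stdev(normal_values)


def anomaly_score(value, baseline):
    """Return how many standard deviations a reading lies from the mean."""
    mean, stdev = baseline
    if stdev == 0:
        # Degenerate baseline: any deviation at all is maximally unusual.
        return 0.0 if value == mean else float("inf")
    return abs(value - mean) / stdev


def flag_anomalies(values, baseline, threshold=3.0):
    """Return the readings whose score exceeds the (assumed) threshold."""
    return [v for v in values if anomaly_score(v, baseline) > threshold]


# Hypothetical bytes-per-minute readings from a quiet period.
normal_traffic = [980, 1010, 1005, 995, 1020, 990, 1000, 1015]
baseline = fit_baseline(normal_traffic)

# New readings, one of which is a large spike.
new_traffic = [1002, 998, 5400, 1011]
suspicious = flag_anomalies(new_traffic, baseline)
print(suspicious)  # [5400]
```

A VAE-based detector follows the same outline, but replaces the mean and standard deviation with a learned model and scores each input by how poorly the model reconstructs it.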

These examples illustrate how generative AI can enhance cybersecurity by improving threat detection, facilitating training, and aiding in various aspects of security operations. However, it’s crucial to carefully implement and monitor these AI solutions to address potential challenges and ensure their effectiveness.
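
As one illustration of the password security item above, the sketch below trains a very small generative model, a character-level bigram (Markov) model, on a list of common passwords and then scores how "predictable" a candidate looks to that model. The corpus, the function names, and the alphabet size of 95 printable ASCII characters are assumptions for the example; a real deployment would need a far larger corpus and careful handling of the password data itself.

```python
import math
from collections import defaultdict

START = "^"  # marker for the beginning of a password


def train_bigram_model(passwords):
    """Count character-to-character transitions in a password corpus."""
    counts = defaultdict(lambda: defaultdict(int))
    for pw in passwords:
        prev = START
        for ch in pw:
            counts[prev][ch] += 1
            prev = ch
    return counts


def avg_log_likelihood(password, counts, alphabet_size=95):
    """Average per-character log-probability, with add-one smoothing.

    Values closer to zero mean the password resembles the training
    corpus, which suggests it is easier to guess.
    """
    if not password:
        raise ValueError("password must not be empty")
    total = 0.0
    prev = START
    for ch in password:
        row = counts.get(prev, {})
        numerator = row.get(ch, 0) + 1
        denominator = sum(row.values()) + alphabet_size
        total += math.log(numerator / denominator)
        prev = ch
    return total / len(password)


# Hypothetical corpus of well-known weak passwords.
common = ["password", "password1", "123456", "qwerty", "letmein", "passw0rd"]
model = train_bigram_model(common)

predictable = avg_log_likelihood("password123", model)
unusual = avg_log_likelihood("x9$Tq!vL", model)
print(predictable > unusual)  # the familiar-looking password scores higher
```

The same model can be run in the other direction, sampling likely passwords to test an organization's password policy, which is why this kind of tool is useful to defenders and attackers alike.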

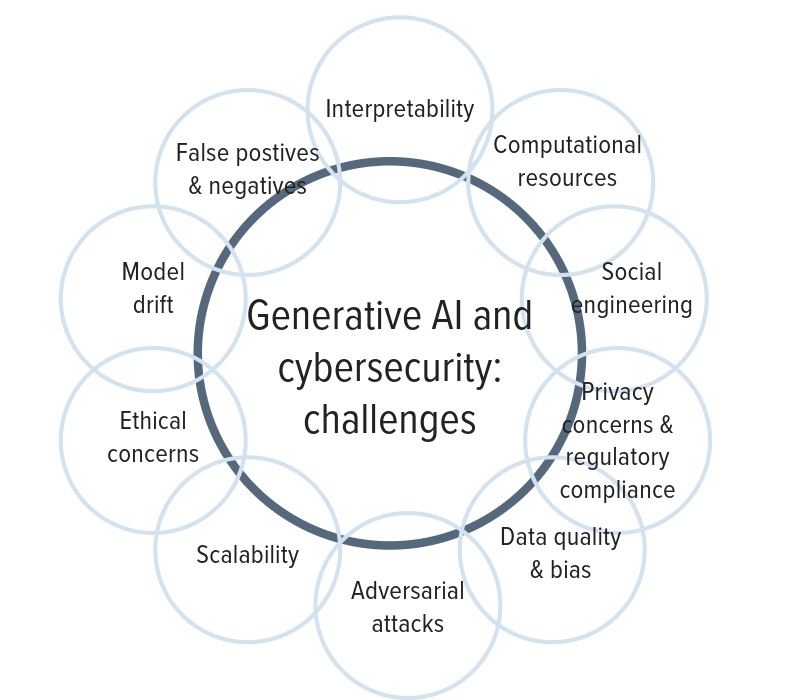

The Challenges

Despite all the exciting opportunities and applications, generative AI comes with challenges, such as ethical concerns regarding privacy and bias, lack of transparency, resource intensity, and false positives. It also arms attackers. As generative AI advances, cybercriminals have gained a new tool for morphing malicious code, making it harder to detect and defend against. This calls for constantly innovating and evolving defense strategies to stay ahead of them. What’s more, the use of generative AI by government adversaries, for example to build self-evolving malware, can lead to a dangerous escalation of their capabilities, while also making it easier for hackers to gain access to sensitive information.

Generative AI has also become remarkably good at mimicking human speech patterns and linguistic tendencies, to the point where it is increasingly difficult to tell the difference between humans and machines. All these challenges make it imperative that we remain vigilant and proactive in the face of this constantly evolving landscape of cybersecurity threats.

Generative AI in cybersecurity faces several key challenges:

- Adversarial Attacks: Generative models can be vulnerable to adversarial attacks, where slight input modifications can lead to incorrect outputs. Attackers can exploit this to evade detection.

- Data Quality and Bias: The effectiveness of generative AI relies heavily on the quality and diversity of its training data: the more reliable the data, the more precise the outcomes. Biased or incomplete data can lead to biased or inaccurate model outputs, potentially overlooking certain threats. Organizations should therefore exercise caution before adopting an AI solution, as an improperly trained model can cause significant harm.

- Privacy Concerns and Regulatory Compliance: Meeting regulatory requirements, such as the European Union’s General Data Protection Regulation (GDPR) or the United States Health Insurance Portability and Accountability Act (HIPAA), while using generative AI in cybersecurity can be complex, especially when dealing with personal or sensitive data. Due to legal concerns, many governments around the globe have started curtailing the use of generative AI. For instance, the EU’s draft AI Act contains rules for general-purpose AI, that is, AI systems that may be deployed for a variety of tasks with various levels of risk. In another example, due to data protection and privacy concerns, the Italian data protection regulator, Garante, issued a temporary ban on ChatGPT.

- Social Engineering: Cybercriminals use generative AI to sharpen social engineering, that is, malicious activities that trick people into divulging sensitive organizational information. With generative AI’s growing natural language processing capabilities, such attempts are becoming more streamlined and effective. What’s more, hackers can now attempt attacks in languages they do not know, because AI can produce text that reads as if a native speaker wrote it. It’s a scary thought, but it’s our reality today.

- Computational Resources: Training and running generative models can be computationally expensive, requiring significant hardware resources and energy, which may not be feasible for all organizations.

- Interpretability: Generative AI models often lack interpretability, making it challenging for security professionals to understand why a certain decision was made or to troubleshoot issues effectively.

- False Positives and Negatives: Generative AI, like all AI systems, can produce false positives and negatives. Overreliance on automated systems can lead to missing threats or wasting resources investigating false alarms.

- Model Drift: Generative models may become less effective over time as the threat landscape evolves, requiring constant monitoring and retraining.

- Ethical Concerns: Using generative AI in cybersecurity raises ethical questions about AI bias, discrimination, and potential violations of human rights, including privacy, freedom of expression, and access to information.

- Scalability: Implementing generative AI solutions across large and complex networks or systems can be challenging due to scalability issues. Gaps in coverage can in turn create information security vulnerabilities.
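
The false-positive problem in the list above is easier to appreciate with some arithmetic. The sketch below applies Bayes' rule to estimate what share of a detector's alerts are real threats. The function name and all three input rates are hypothetical and chosen only to show the effect of rare attacks on alert quality.

```python
def alert_precision(prevalence, true_positive_rate, false_positive_rate):
    """Fraction of alerts that correspond to real threats (Bayes' rule)."""
    true_alerts = prevalence * true_positive_rate
    false_alerts = (1 - prevalence) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)


# Hypothetical scenario: 1 in 10,000 events is malicious, the detector
# catches 99% of malicious events and wrongly flags 1% of benign ones.
precision = alert_precision(
    prevalence=0.0001,
    true_positive_rate=0.99,
    false_positive_rate=0.01,
)
print(f"{precision:.2%} of alerts are real threats")  # 0.98%
```

Under these assumed numbers, a detector that sounds highly accurate still produces about a hundred false alarms for every real threat, which is why alert volume and analyst time belong in any evaluation of an AI-based security tool.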

Addressing these challenges calls for a holistic approach that combines generative AI with other cybersecurity techniques and ongoing vigilance to stay ahead of evolving threats. Additionally, transparency, responsible AI practices, and ethical considerations should guide the deployment of generative AI in cybersecurity. It is essential that we use this technology ethically and transparently, tailoring it to the specific needs of each organization, context, and country. With generative AI on our side, we can stay one step ahead of cybercriminals and safeguard our digital future.